DiffusionRenderer: Neural Inverse and Forward Rendering with Video Diffusion Models

https://research.nvidia.com/labs/toronto-ai/DiffusionRenderer/

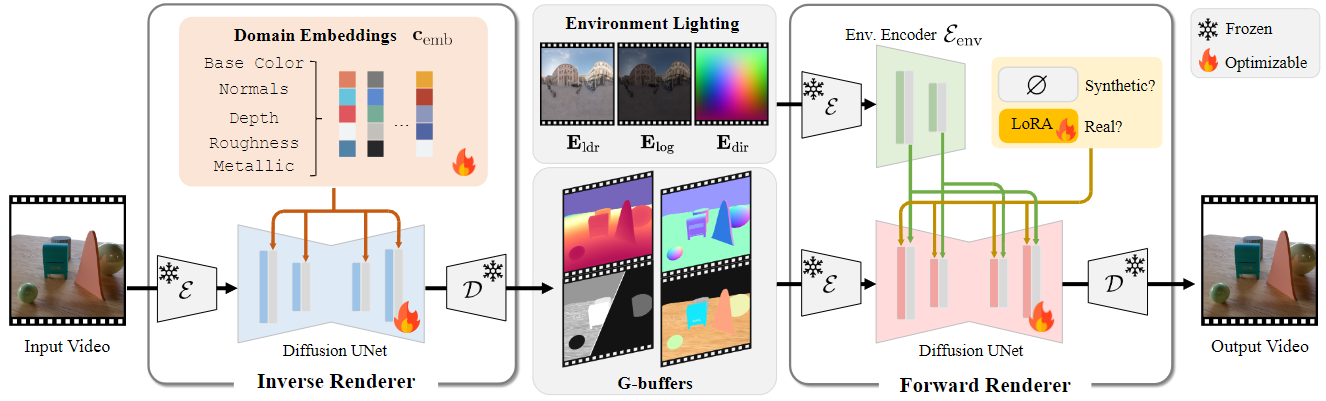

DiffusionRenderer is a general-purpose method for both neural inverse and forward rendering. From input images or videos, it accurately estimates geometry and material buffers, and generates photorealistic images under specified lighting conditions, offering fundamental tools for image editing applications.

Understanding and modeling lighting effects are fundamental tasks in computer vision and graphics. Classic physically-based rendering (PBR) accurately simulates light transport, but relies on precise scene representations (explicit 3D geometry, high-quality material properties, and lighting conditions) that are often impractical to obtain in real-world scenarios. Therefore, we introduce DiffusionRenderer, a neural approach that addresses the dual problem of inverse and forward rendering within a holistic framework. Leveraging powerful video diffusion model priors, the inverse rendering model accurately estimates G-buffers from real-world videos, providing an interface for image editing tasks and training data for the rendering model. Conversely, our rendering model generates photorealistic images from G-buffers without explicit light transport simulation. Experiments demonstrate that DiffusionRenderer effectively approximates inverse and forward rendering, consistently outperforming the state-of-the-art. Our model enables practical applications from a single video input, including relighting, material editing, and realistic object insertion.
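For context, the light transport that classic PBR simulates is commonly written as the rendering equation. The form below is the standard textbook statement, not notation taken from this paper; all symbols are assumptions introduced here for illustration: $L_o$ is outgoing radiance at surface point $x$ in direction $\omega_o$, $L_e$ is emitted radiance, $f_r$ is the BRDF (the material), $L_i$ is incoming radiance (the lighting), and $n$ is the surface normal (the geometry).

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (n \cdot \omega_i)\, \mathrm{d}\omega_i
```

Read this way, forward rendering evaluates $L_o$ given geometry, materials, and lighting, while inverse rendering recovers those quantities from observed images. The abstract describes the two DiffusionRenderer models as neural approximations of these two directions, with the rendering model avoiding an explicit evaluation of this integral.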